How DataOps Can Turn Your Dirty Data into Operational Gold

Every business has data. What separates the winners from the rest is what they do with that data. Dirty, inconsistent, slow data is like trying to drive with the parking brake on. It drags you down. But with DataOps, you can release that drag, turn chaos into clarity, and put your data to work in ways that deliver real business gains.

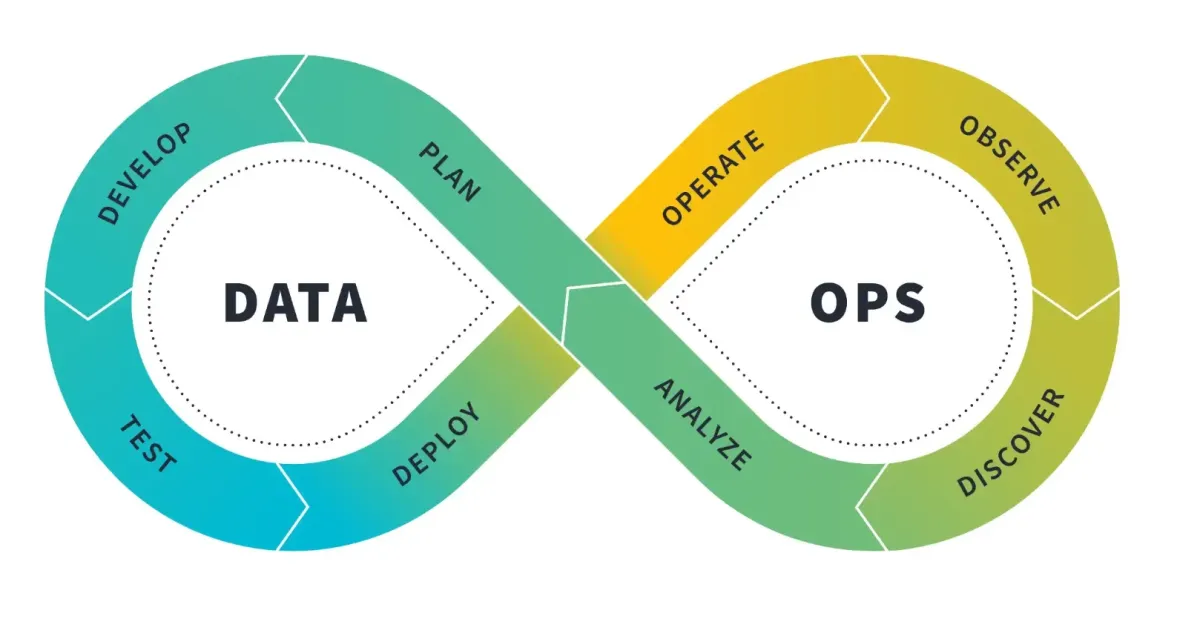

What is DataOps?

DataOps (short for “Data Operations”) is a set of practices, processes, and tools that combine elements of Agile, DevOps, quality engineering, and lean thinking, all oriented around delivering high-quality, timely data to users.

It’s about improving speed, quality, collaboration, and automation so your data pipelines aren’t brittle, error-prone, or slow. IBM describes it as a collaborative data management practice that automates parts of the data lifecycle, improves monitoring, and ensures reliability.

Why “Dirty Data” is Holding You Back

Inconsistent data formats, missing values, duplicates, stale records → they lead to wrong decisions, wasted work, and mistrust in reports.

Slow feedback loops: when cleaning or fixing data is manual and reactive, you spend more time diagnosing than using.

Poor visibility: errors hidden until too late, difficult to trace lineage or source of problems.

Siloed teams: separate departments not sharing practices or standards → inconsistent definitions, metrics, or pipelines.

How DataOps Transforms Dirty Data into Gold

Here are the levers you can pull when adopting DataOps:

Automated Testing & Monitoring: Build tests for data correctness, schema, completeness; monitor pipelines with alerts when something goes wrong. This catches issues before they cascade.

Continuous Integration / Continuous Delivery for Data Pipelines: Just like in software, you want your changes to data ingestion, transformation, etc., to be versioned, tested, and deployable in safer increments. Enables faster iteration.

Collaboration Across Roles: Analysts, engineers, operations, business users all communicating terms, definitions, standards. Shared ownership reduces delays and misalignment.

Automation Where Possible: Automate mundane or repetitive tasks (data cleaning, duplicates detection, standardization). Free people to focus on insights, strategy.

Lean & Agile Practices: Short cycles, iterative improvements, incremental delivery. You don’t need perfect pipelines from day one—deliver usable fast, then refine.

Real-World Example

Imagine a retail company that had marketing, operations, and finance using the same sales data but each team seeing different numbers. Discrepancies came from:

Sales data arriving in multiple formats (CSV, API)

Duplicate records for same customer tied to different identifiers

Outdated category tags on products that weren’t aligned across systems

They adopted DataOps:

Created a shared glossary/definitions so everyone agreed on what a “sale,” “customer,” “active product” etc. means.

Built tests to check for missing values, duplicate customer IDs, mismatched product categories.

Automated cleaning steps early in ingestion (normalize formats, map old tags to new).

Set up monitoring dashboards that alert when error rates exceed a threshold.

Outcomes: fewer mismatches, faster reports, people trusted dashboards more, decision latency went down. What used to take a week to reconcile across teams now took hours.

How to Begin Implementing DataOps

Here are initial steps:

Assess current pain points: where are delays, errors, rework in your data pipelines?

Establish clear definitions / data standards with stakeholders.

Identify low-hanging automation opportunities (cleaning, validation, duplicate detection).

Introduce testing and monitoring in the pipeline: alerts, logs, dashboards.

Encourage collaboration: regular syncs across analytics, engineering, business teams.

Challenges & What to Prepare For

Resistance to change: people used to old ways will push back. Needs leadership support and gradual roll-outs.

Tooling & infrastructure costs: some automation and monitoring tools have overhead. Balance investment vs benefit.

Keeping standards alive: definitions might drift; need governance and periodic review.

Complexity of legacy data: old systems, messy histories: cleaning might be messy, take time.

DataOps isn’t a luxury—it’s how you turn dirty, slow, and inconsistent data into an asset. When done well, it makes your operations sharper, your insights more trustworthy, and your decision-making faster. If Bytelock Solutions helps you implement DataOps, we don’t just clean your data—we build systems so your data stays clean, useful, and powerful.